In 1894, physics seemed complete. Then Kelvin spotted 2 looming “clouds”

- In the late 19th century, physicists came to grips with electricity and magnetism.

- Further discoveries surrounding the atom led some to believe they were close to understanding the “grand underlying principles” of physics in their entirety.

- However, Lord Kelvin and others perceived two “clouds” looming over the horizon of physics.

As the nineteenth century drew to a close, you would have forgiven physicists for hoping that they were on track to understand everything. The universe, according to this tentative picture, was made of particles that were pushed around by fields.

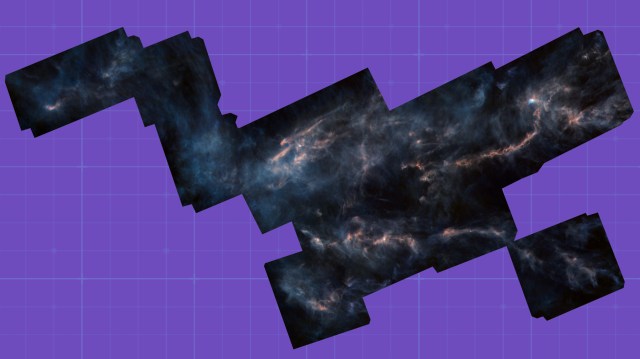

The idea of fields filling space had taken off throughout the 1800s. Earlier, Isaac Newton had presented a beautiful and compelling theory of motion and gravity, and Pierre-Simon Laplace had shown how we could reformulate that theory in terms of a gravitational field stretching between every object in the universe. A field is just something that has a value at each point in space. The value could be a simple number, or it could be a vector or something more complicated, but any field exists everywhere through space.

But if all you cared about was gravity, the field seemed optional — a point of view you could choose to take or not, depending on your preferences. It was equally okay to think as Newton did, directly in terms of the force created on one object by the gravitational pull of others without anything stretching between them.

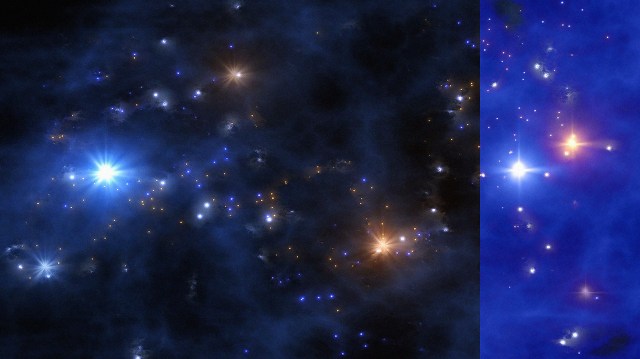

That changed in the nineteenth century, as physicists came to grips with electricity and magnetism. Electrically charged objects exert forces on each other, which it is natural to attribute to the existence of an electric field stretching between them. Experiments by Michael Faraday showed that a moving magnet could induce electrical current in a wire without actually touching it, pointing to the existence of a separate magnetic field, and James Clerk Maxwell managed to combine these two kinds of fields into a single theory of electromagnetism, published in 1873. This was an enormous triumph of unification, explaining a diverse set of electrical and magnetic phenomena in a single compact theory. “Maxwell’s equations” bedevil undergraduate physics students to this very day.

One of the triumphant implications of Maxwell’s theory was an understanding of the nature of light. Rather than a distinct kind of substance, light is a propagating wave in the electric and magnetic fields, also known as electromagnetic radiation. We think of electromagnetism as a “force,” and it is, but Maxwell taught us that fields carrying forces can vibrate, and in the case of electric and magnetic fields, those vibrations are what we perceive as light. The quanta of light are particles called photons, so we will sometimes say, “Photons carry the electromagnetic force.” But at the moment we’re still thinking classically.

Take a single charged particle, like an electron. Left sitting by itself, it will have an electric field surrounding it, with lines of force pointing toward the electron. The force will fall off as an inverse-square law, just as in Newtonian gravity.

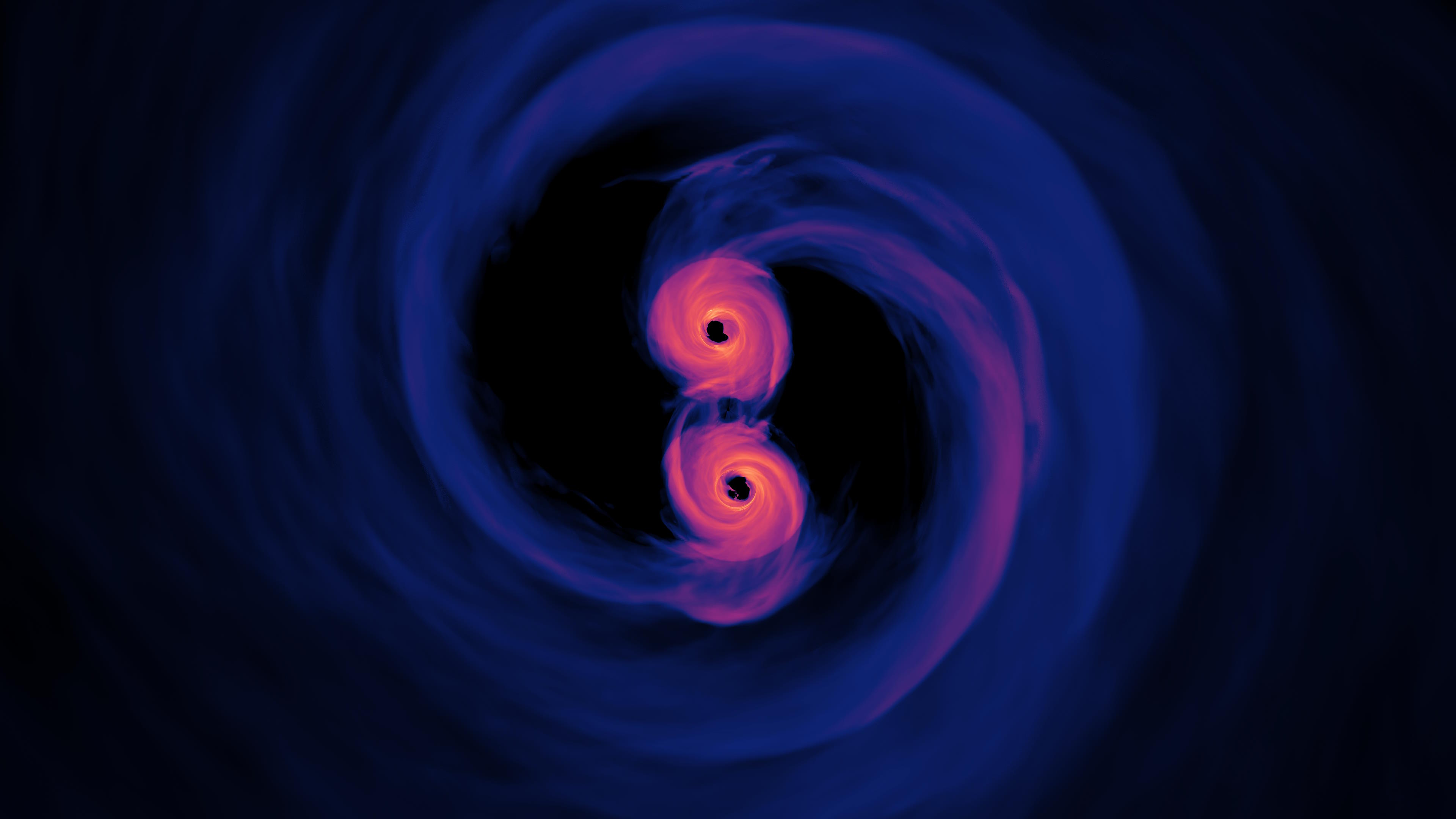

If we move the electron, two things happen: First, a charge in motion creates a magnetic field as well as an electric one. Second, the existing electric field will adjust how it is oriented in space so that it remains pointing toward the particle. And together, these two effects (small magnetic field, small deviation in the existing electric field) ripple outward, like waves from a pebble thrown into a pond.

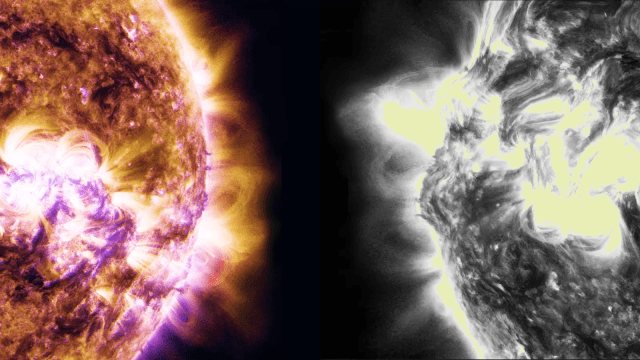

Maxwell found that the speed of these ripples is precisely the speed of light — because it is light. Light, of any wavelength from radio to x-rays and gamma rays, is a propagating vibration in the electric and magnetic fields. Almost all the light you see around you right now has its origin in a charged particle being jiggled somewhere, whether it’s in the filament of a lightbulb or the surface of the sun.
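To see that coincidence in numbers, here is a small sketch. It assumes the standard SI values for the two constants that set the strength of electric and magnetic effects in empty space (the vacuum permittivity and permeability), neither of which is quoted in the text above, and it uses the textbook result that ripples in Maxwell's theory travel at one over the square root of their product. The function and variable names are ours, chosen only for illustration.

```python
import math

# Assumed SI values (CODATA 2018); these numbers are not taken from the article.
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, farads per meter
MU_0 = 1.25663706212e-6       # vacuum permeability, newtons per ampere squared

# Defined value of the speed of light, meters per second.
MEASURED_SPEED_OF_LIGHT = 299_792_458.0


def wave_speed(epsilon: float, mu: float) -> float:
    """Speed of electromagnetic ripples: 1 / sqrt(epsilon * mu)."""
    return 1.0 / math.sqrt(epsilon * mu)


predicted = wave_speed(EPSILON_0, MU_0)
relative_gap = abs(predicted - MEASURED_SPEED_OF_LIGHT) / MEASURED_SPEED_OF_LIGHT
print(f"predicted speed: {predicted:.6e} m/s")
print(f"relative gap from the speed of light: {relative_gap:.1e}")
```

Two constants that can be measured with nothing but charges, wires, and magnets combine to give the speed of light, which is the numerical heart of Maxwell's insight.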

Simultaneously in the nineteenth century, the role of particles was also becoming clear. Chemists, led by John Dalton, championed the idea that matter was made of individual atoms, with one specific kind of atom associated with each chemical element. Physicists belatedly caught on once they realized that thinking of gases as collections of bouncing atoms could explain things like temperature, pressure, and entropy.

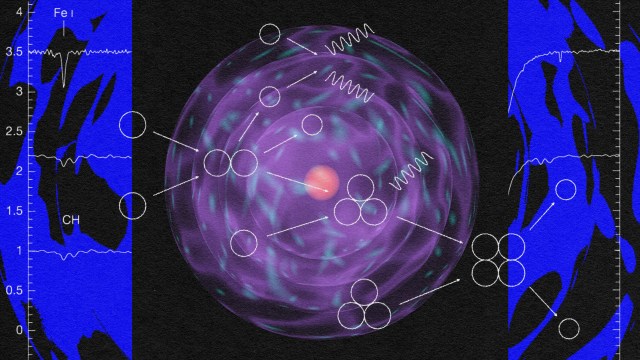

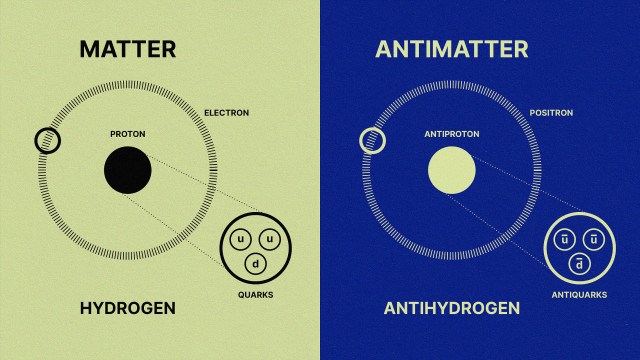

But the term atom, borrowed from the ancient Greek idea of an indivisible elementary unit of matter, turned out to be a bit premature. Though they are the building blocks of chemical elements, modern-day atoms are not indivisible. A quick-and-dirty overview, with details to be filled in later: Atoms consist of a nucleus made of protons and neutrons surrounded by orbiting electrons. Protons have a positive electrical charge, neutrons have zero charge, and electrons have a negative charge. We can make a neutral atom if we have equal numbers of protons and electrons since their electrical charges will cancel each other out.

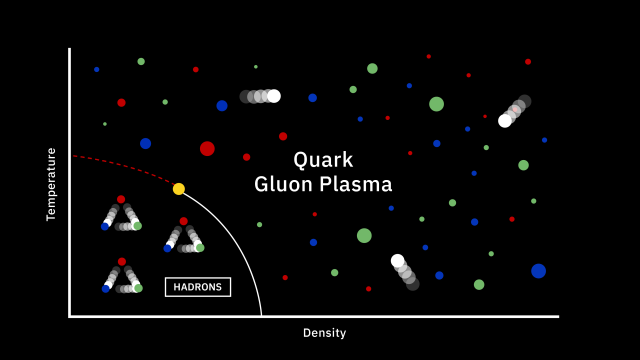

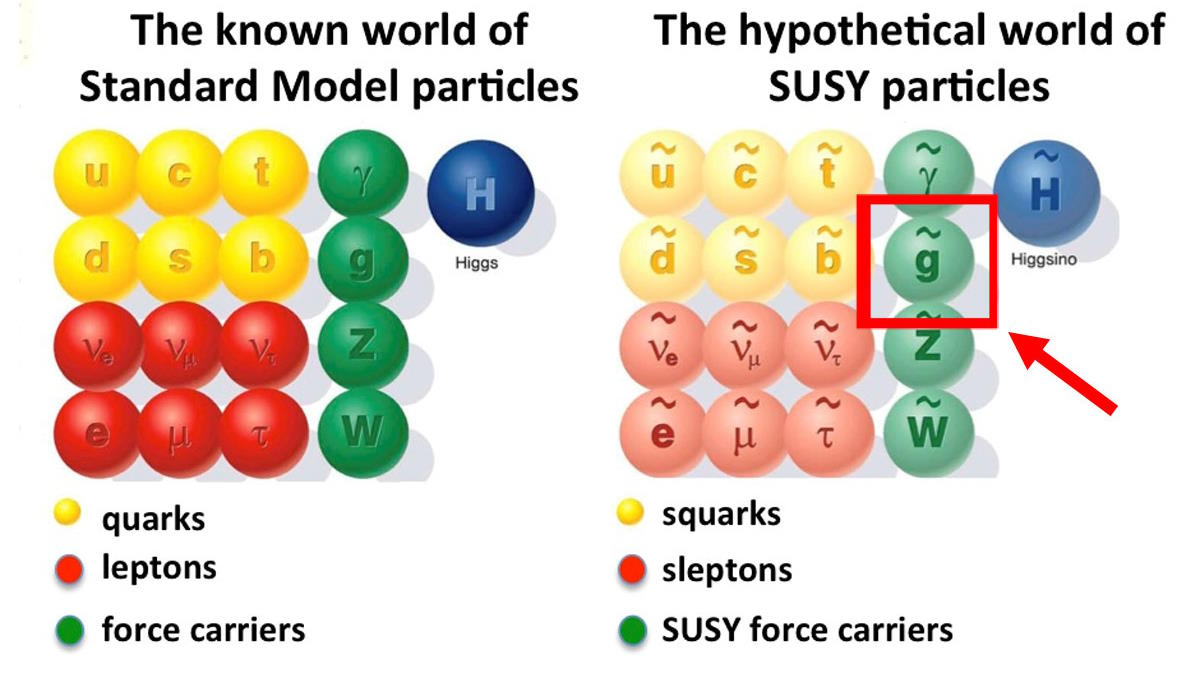

Protons and neutrons have roughly the same mass, with neutrons being just a bit heavier, but electrons are much lighter, about 1/1,800th the mass of a proton. So most of the mass in a person or another macroscopic object comes from the protons and neutrons. The lightweight electrons are more able to move around and are therefore responsible for chemical reactions as well as the flow of electricity. These days we know that protons and neutrons are themselves made of smaller particles called quarks, which are held together by gluons, but there was no hint of that in the early 1900s.
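As a rough back-of-the-envelope check on how little the electrons contribute to an atom's mass, consider the sketch below. It assumes a carbon atom with 6 protons, 6 neutrons, and 6 electrons (our choice of example, not the article's), treats protons and neutrons as having exactly the same mass, uses the approximate 1/1,800 ratio quoted above, and ignores everything else. The names are hypothetical.

```python
# Masses in units of the proton mass. These are simplifying assumptions
# for illustration, not precise measured values.
PROTON_MASS = 1.0
NEUTRON_MASS = 1.0            # assumption: treated as equal to the proton
ELECTRON_MASS = 1.0 / 1800.0  # approximate ratio quoted in the text


def electron_mass_fraction(protons: int, neutrons: int, electrons: int) -> float:
    """Fraction of an atom's total mass carried by its electrons."""
    nucleus = protons * PROTON_MASS + neutrons * NEUTRON_MASS
    shell = electrons * ELECTRON_MASS
    return shell / (nucleus + shell)


# Hypothetical example: a neutral carbon atom (6 protons, 6 neutrons, 6 electrons).
fraction = electron_mass_fraction(protons=6, neutrons=6, electrons=6)
print(f"electrons carry about {fraction:.4%} of the atom's mass")
```

Under these assumptions the electrons account for only a few hundredths of a percent of the total, which is why the protons and neutrons dominate the mass of everyday objects.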

This picture of atoms was put together gradually. Electrons were discovered in 1897 by British physicist J. J. Thomson, who measured their charge and established that they were much lighter than atoms. So somehow there must be two components in an atom: the lightweight, negatively charged electrons and a heavier, positively charged piece. A few years later Thomson suggested a picture in which tiny electrons floated within a larger, positively charged volume. This came to be called the “plum pudding model,” with electrons playing the role of the plums.

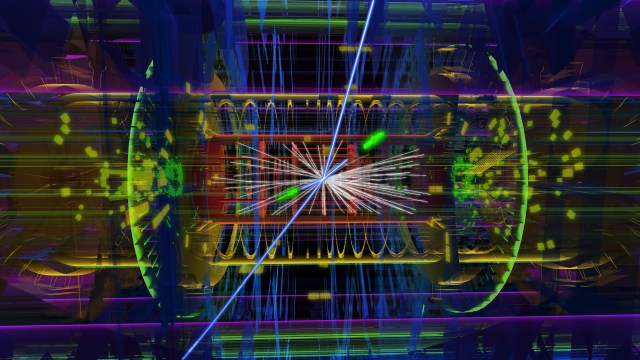

The plum pudding model didn’t flourish for long. A famous experiment by Ernest Rutherford, Hans Geiger, and Ernest Marsden shot alpha particles (now known to be nuclei of helium atoms) at a thin sheet of gold foil. The expectation was that they would mostly pass right through, with their trajectories slightly deflected if they happened to pass right through an atom and interact with the electrons (the plums) or the diffuse positively charged blob (the pudding). Electrons are too light to disturb the alpha particles’ trajectories, and a spread-out positive charge would be too diffuse to have much effect.

But while most of the particles did indeed zip through unaffected, some bounced off at wild angles, even straight back. That could only happen if there was something heavy and substantial for the particles to carom off of. In 1911 Rutherford correctly explained this result by positing that the positive charge was concentrated in a massive central nucleus. When an incoming alpha particle was lucky enough to score a direct hit on the small but heavy nucleus, it would be deflected at a sharp angle, which is what was observed. In 1920 Rutherford proposed the existence of protons (which were just hydrogen nuclei, so had already been discovered), and in 1921 he theorized the existence of neutrons (which were eventually discovered in 1932).

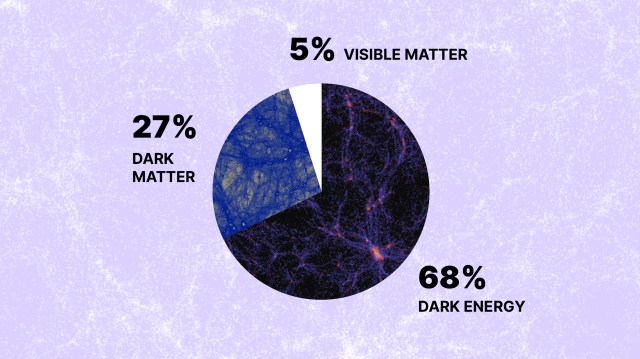

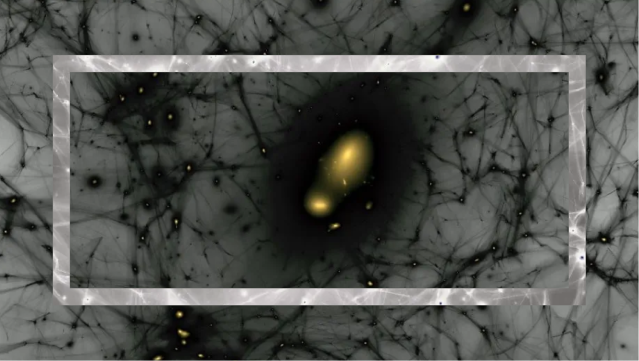

So far, so good, thinks our imagined fin de siècle physicist. Matter is made of particles, the particles interact via forces, and those forces are carried by fields. The entire mechanism would run according to rules established by the framework of classical physics. For particles this is pretty familiar: We specify the positions and the momenta of all the particles, then use one of our classical techniques (Newton’s laws or their equivalent) to describe their dynamics. Fields work in essentially the same way, except that the “position” of a field is its value at every point in space, and its “momentum” is how fast it’s changing at every point. The overall classical picture applies in either case.

The suspicion that physics was close to being all figured out wasn’t entirely off-base. Albert Michelson, at the dedication of a new physics laboratory at the University of Chicago in 1894, proclaimed, “It seems probable that most of the grand underlying principles [of physics] have been firmly established.”

He was quite wrong.

But he was also in the minority. Other physicists, starting with Maxwell himself, recognized that the known behavior of collections of particles and waves didn’t always accord with our classical expectations. William Thomson, Lord Kelvin, is often the victim of a misattributed quote: “There is nothing new to be discovered in physics now. All that remains is more and more precise measurement.” His real view was the opposite. In a lecture in 1900, Thomson highlighted the presence of two “clouds” looming over physics, one of which was eventually to be dispersed by the formulation of the theory of relativity, the other by the theory of quantum mechanics.